Yoav Artzi on X: "BERT fine-tuning is typically done without the bias correction in the ADAM algorithm. Applying this bias correction significantly stabilizes the fine-tuning process. https://t.co/UJj0im0Avt"

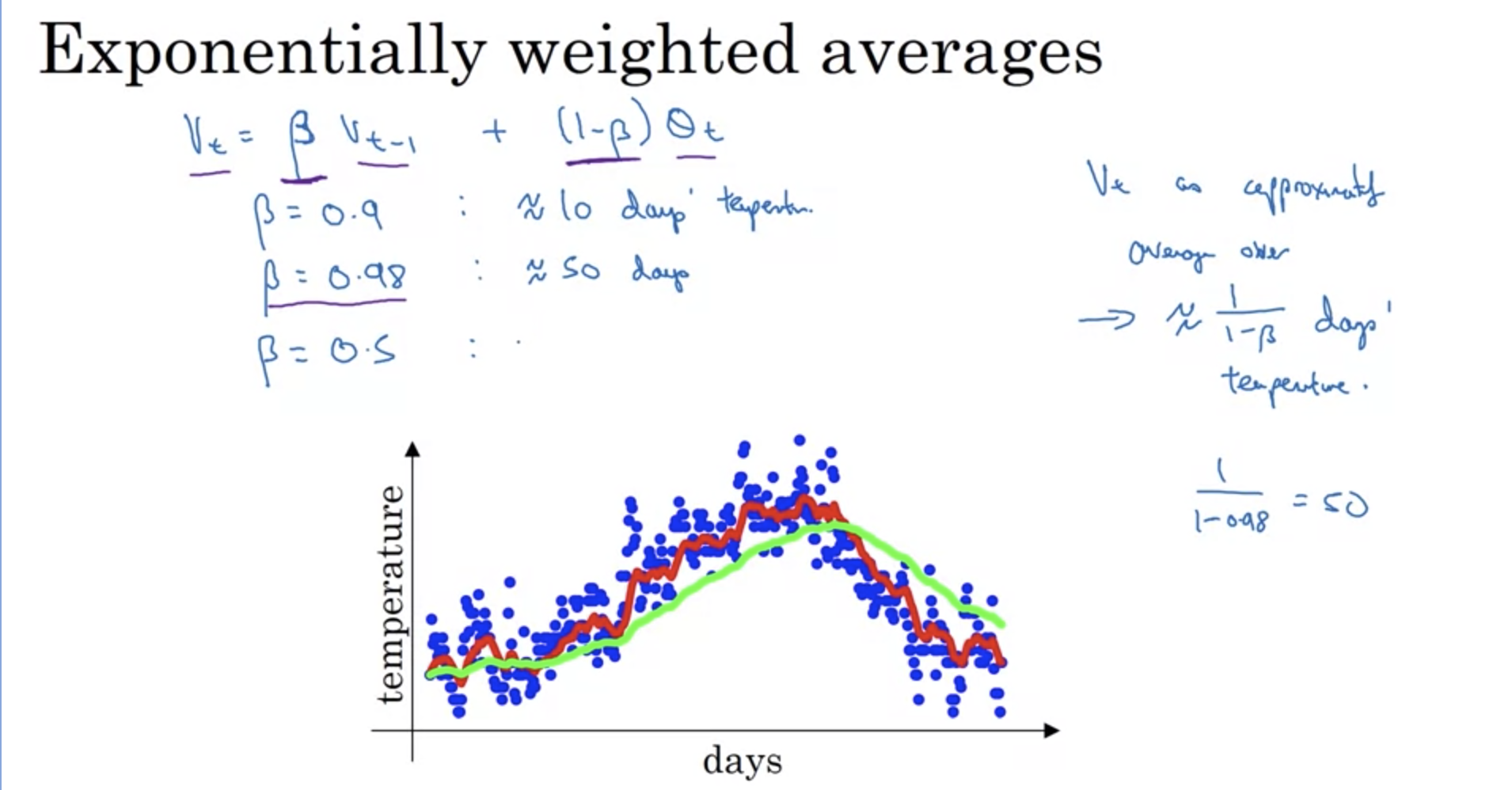

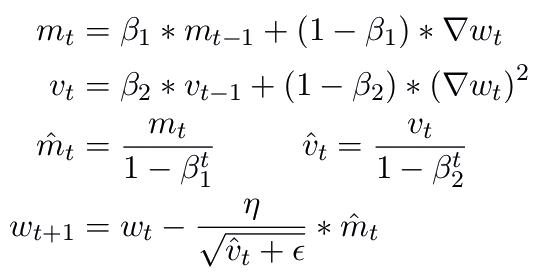

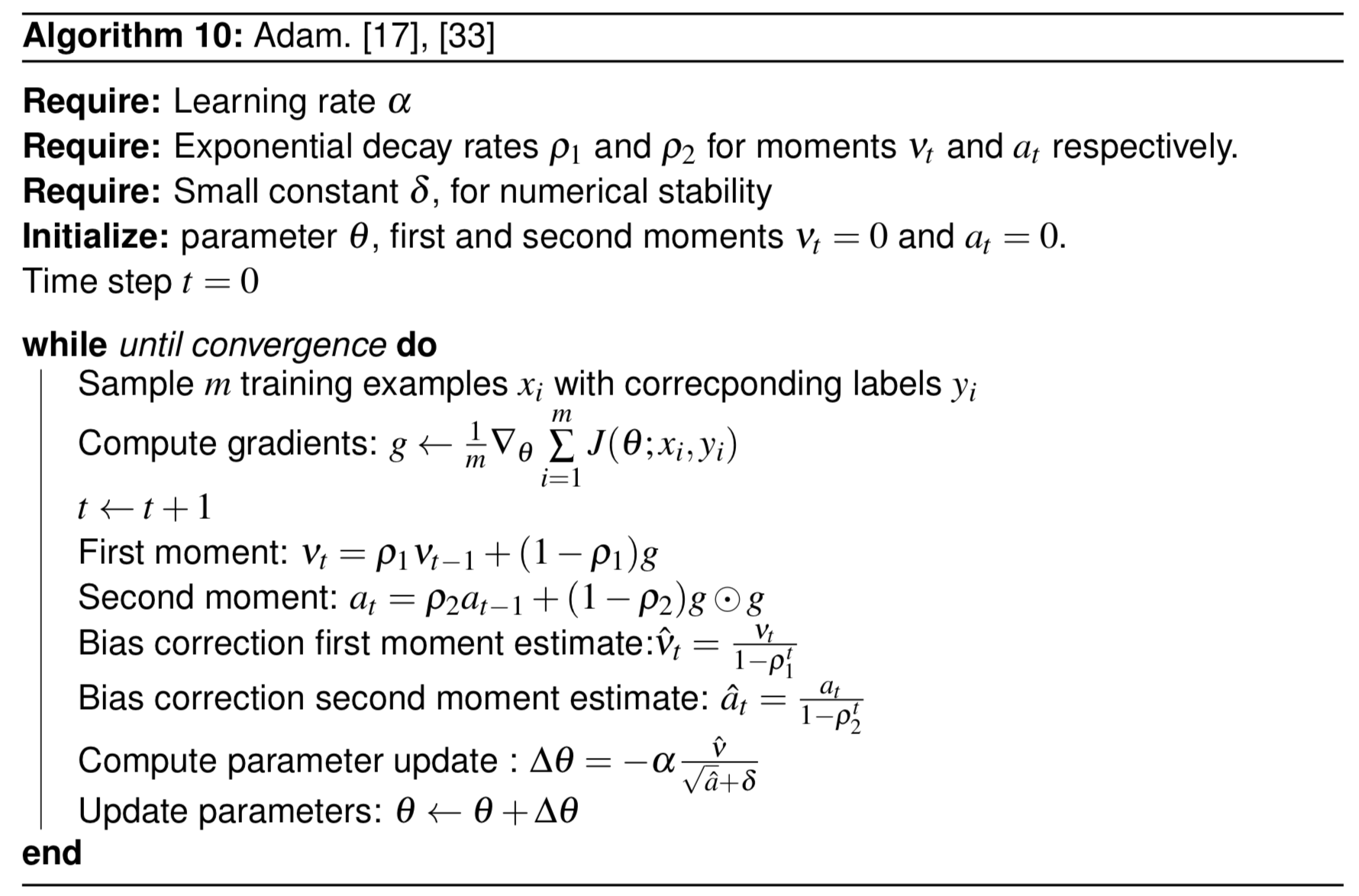

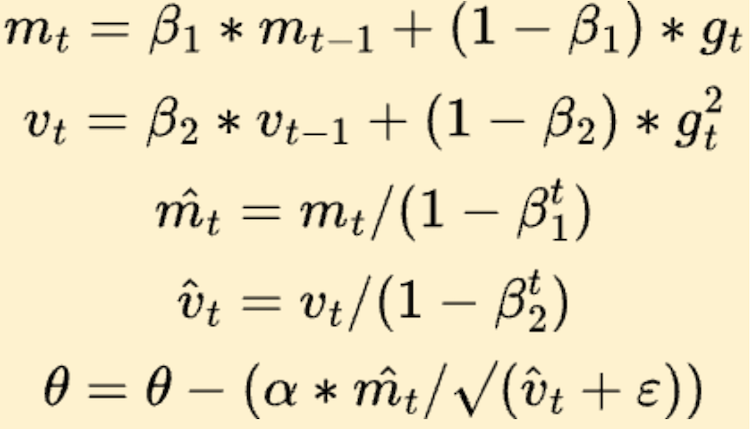
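The minimal scalar sketch below illustrates one mechanism consistent with the tweet's claim. It is written in pure Python, `adam_step` and `first_step_size` are hypothetical helpers, and β1 = 0.9, β2 = 0.999 are assumed to be the usual Adam defaults. Starting from m = v = 0, skipping the correction makes the first update roughly (1 − β1)/√(1 − β2) ≈ 3.16 times the learning rate. With the correction, the first update is about the learning rate itself.

```python
import math


def adam_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
              eps=1e-8, bias_correction=True):
    """One scalar Adam update (hypothetical helper); returns (new_param, m, v)."""
    # Exponential moving averages of the gradient and the squared gradient.
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    if bias_correction:
        # Undo the shrinkage caused by initializing m and v at zero.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
    else:
        # Variant without the correction, as the tweet describes for BERT fine-tuning.
        m_hat, v_hat = m, v
    new_param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return new_param, m, v


def first_step_size(bias_correction, grad=0.5, lr=1e-3):
    """Magnitude of the very first update, starting from param = m = v = 0."""
    new_param, _, _ = adam_step(0.0, grad, 0.0, 0.0, t=1, lr=lr,
                                bias_correction=bias_correction)
    return abs(new_param)


with_corr = first_step_size(True)
without_corr = first_step_size(False)
print(f"with correction:    {with_corr:.6f}")
print(f"without correction: {without_corr:.6f}")
print(f"ratio: {without_corr / with_corr:.3f}")
```

The printed ratio should be close to 0.1/√0.001 ≈ 3.162. It does not depend on the gradient value as long as ε is negligible next to |g|. A larger β2 makes the uncorrected first step even larger relative to the learning rate.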

Understanding a derivation of bias correction for the Adam optimizer - Cross Validated
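The core of the usual bias-correction derivation can be written out as follows. It assumes that the gradient distribution is stationary, so 𝔼[g_i] = 𝔼[g] for every step i. Here α is the step size and g_t² is the elementwise square.

```latex
\begin{aligned}
m_0 &= 0, \qquad m_t = \beta_1 m_{t-1} + (1-\beta_1)\, g_t
  \;\Longrightarrow\; m_t = (1-\beta_1)\sum_{i=1}^{t} \beta_1^{\,t-i} g_i, \\
\mathbb{E}[m_t] &= (1-\beta_1)\sum_{i=1}^{t} \beta_1^{\,t-i}\, \mathbb{E}[g_i]
  = \mathbb{E}[g]\,(1-\beta_1)\sum_{j=0}^{t-1} \beta_1^{\,j}
  = \mathbb{E}[g]\,(1-\beta_1^{\,t}), \\
\mathbb{E}[v_t] &= \mathbb{E}[g^2]\,(1-\beta_2^{\,t})
  \quad \text{(same argument with } v_t = \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2\text{)}, \\
\hat m_t &= \frac{m_t}{1-\beta_1^{\,t}}, \qquad
\hat v_t = \frac{v_t}{1-\beta_2^{\,t}}, \qquad
\theta_t = \theta_{t-1} - \alpha\,\frac{\hat m_t}{\sqrt{\hat v_t} + \epsilon}.
\end{aligned}
```

Dividing by 1 − β^t rescales the zero-initialized averages so that their expectations match 𝔼[g] and 𝔼[g²]. The factor matters most in early steps, where β^t is still close to 1.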

Add option to exclude first moment bias-correction in Adam/Adamw/other Adam variants. · Issue #67105 · pytorch/pytorch · GitHub

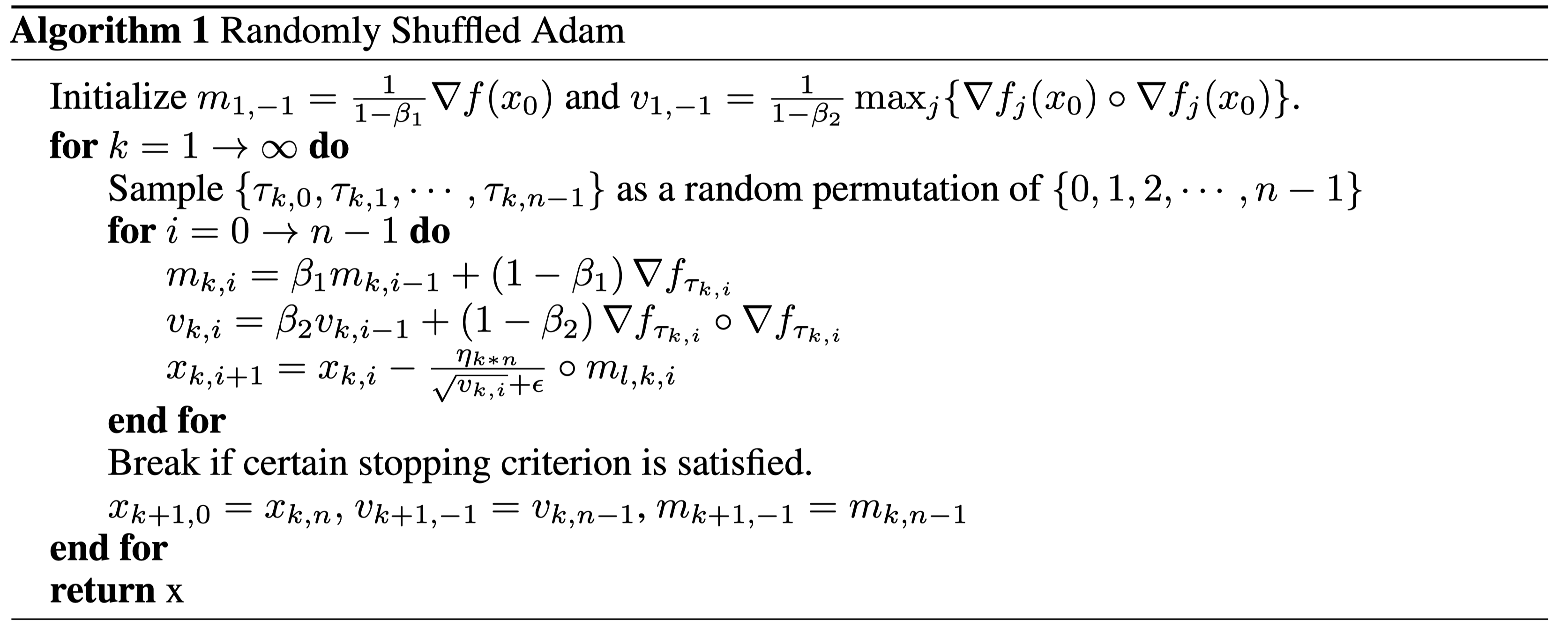

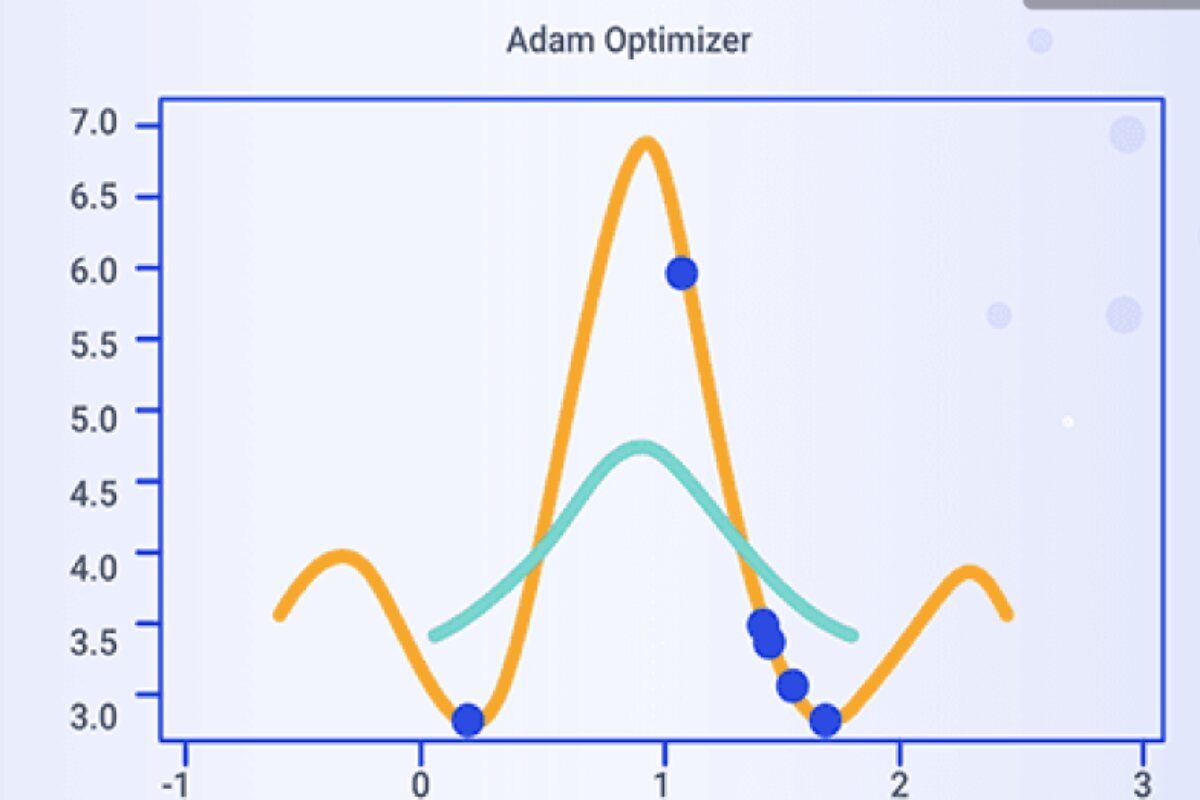

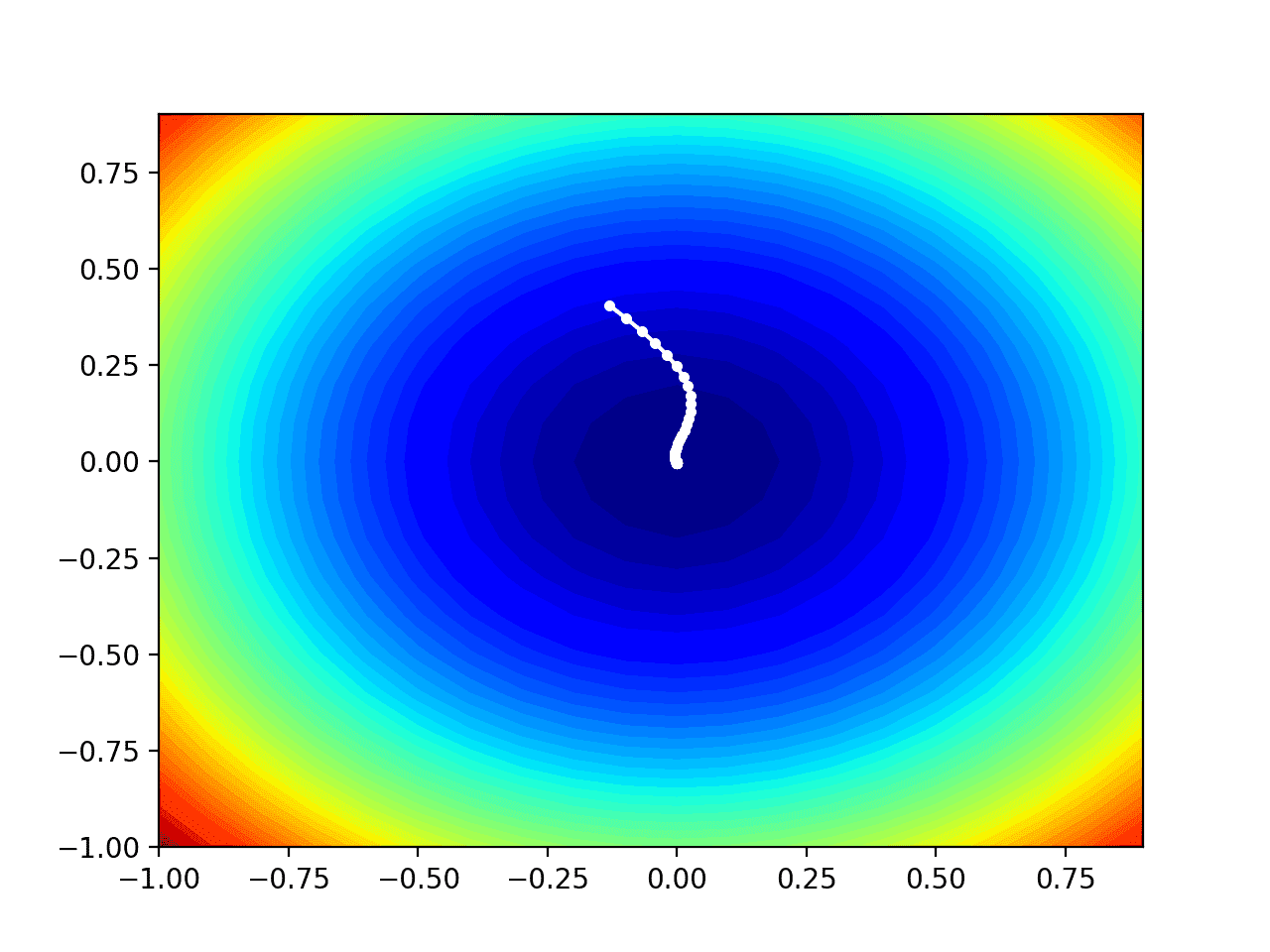
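The feature request in the issue title can be sketched as two independent switches, one per moment. This is a minimal pure-Python sketch. `correct_first_moment` and `correct_second_moment` are hypothetical parameter names, not options of `torch.optim.Adam` or `torch.optim.AdamW`. With a constant gradient, dropping only the first-moment correction scales the t-th update by 1 − β1^t. Early steps therefore start at 10% of the learning rate and ramp up, similar in effect to a short learning-rate warmup.

```python
import math


def adam_updates(grads, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8,
                 correct_first_moment=True, correct_second_moment=True):
    """Update magnitudes Adam would apply to a scalar gradient sequence.

    The two flags are hypothetical names used for illustration only.
    """
    m = v = 0.0
    steps = []
    for t, g in enumerate(grads, start=1):
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Apply each bias correction only if its flag is set.
        m_hat = m / (1 - beta1 ** t) if correct_first_moment else m
        v_hat = v / (1 - beta2 ** t) if correct_second_moment else v
        steps.append(lr * m_hat / (math.sqrt(v_hat) + eps))
    return steps


# Constant gradient for 5 steps: full correction vs. second-moment-only correction.
grads = [0.5] * 5
full = adam_updates(grads)
no_first = adam_updates(grads, correct_first_moment=False)
for t, (a, b) in enumerate(zip(full, no_first), start=1):
    print(f"t={t}: full={a:.6f}  no_first_moment_corr={b:.6f}  ratio={b / a:.4f}")
```

With these defaults, the ratio column follows 1 − 0.9^t (0.1, 0.19, 0.271, ...), and the fully corrected steps stay at about the learning rate.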

![[PDF] AdamD: Improved bias-correction in Adam, Figure 1 (Semantic Scholar)](https://d3i71xaburhd42.cloudfront.net/c2662720fa449785d2e495458aad582a5a02cbec/2-Figure1-1.png)
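This comparison assumes that AdamD keeps the bias correction on v_t and drops it on m_t, which matches the first-moment feature request in the PyTorch issue above. Under that assumption, the two update rules differ in a single term. The first-step comparison takes m_0 = v_0 = 0 and negligible ε.

```latex
\begin{aligned}
\text{Adam:}\quad  \theta_t &= \theta_{t-1} - \alpha\,\frac{m_t/(1-\beta_1^{\,t})}{\sqrt{v_t/(1-\beta_2^{\,t})} + \epsilon}, \\
\text{AdamD:}\quad \theta_t &= \theta_{t-1} - \alpha\,\frac{m_t}{\sqrt{v_t/(1-\beta_2^{\,t})} + \epsilon}, \\
\text{at } t = 1:\quad |\Delta\theta_1^{\text{Adam}}| &\approx \alpha, \qquad
|\Delta\theta_1^{\text{AdamD}}| \approx (1-\beta_1)\,\alpha = 0.1\,\alpha \ \text{ for } \beta_1 = 0.9.
\end{aligned}
```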